Okay, so a lot has happened over the last month!

Early this month I started to dive into how we are going to stitch our images together. I approached this through OpenCV so that I have access to Python libraries like NumPy. At its core, the program takes a directory of images as input, detects key points, computes and matches their feature descriptors (stored as vectors), then estimates a homography from the matches and warps the images to fit. The results are pretty good right now, but could be better. I’ve since spent quite a bit of time working with the code, getting it to accept more images on the first run.
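To make the homography step a little more concrete, here is a minimal NumPy-only sketch of estimating a homography from matched points with the direct linear transform. This is not our stitching code: in practice OpenCV's `cv2.findHomography` does this job (and handles bad matches), and the function names `estimate_homography` and `warp_points` are just ones I made up for illustration. It also assumes the matches are already clean and skips the coordinate normalisation a robust version would use.

```python
import numpy as np


def estimate_homography(src, dst):
    """Estimate the 3x3 homography mapping src points onto dst points.

    src, dst: sequences of matching (x, y) pairs, at least four of them.
    Illustrative helper, assumes outlier-free matches.
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    if src.shape != dst.shape or src.ndim != 2 or src.shape[1] != 2 or len(src) < 4:
        raise ValueError("need at least four matching (x, y) pairs")

    # Each correspondence contributes two linear constraints on the 9 entries of H.
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        rows.append([-x, -y, -1, 0, 0, 0, u * x, u * y, u])
        rows.append([0, 0, 0, -x, -y, -1, v * x, v * y, v])

    # The solution is the right singular vector with the smallest singular value.
    _, _, vt = np.linalg.svd(np.asarray(rows))
    h = vt[-1].reshape(3, 3)
    return h / h[2, 2]  # fix the scale so the bottom-right entry is 1


def warp_points(h, pts):
    """Apply homography h to an array of (x, y) points."""
    pts = np.asarray(pts, dtype=float)
    homog = np.hstack([pts, np.ones((len(pts), 1))])
    mapped = homog @ h.T
    return mapped[:, :2] / mapped[:, 2:3]  # divide out the projective scale


if __name__ == "__main__":
    # Made-up correspondences: corners of one image and where they land in the next.
    a = [(0, 0), (640, 0), (640, 480), (0, 480)]
    b = [(30, 12), (700, 5), (690, 500), (25, 470)]
    H = estimate_homography(a, b)
    print(warp_points(H, a))
```

With exactly four matches the fit is exact; with more, the SVD gives a least-squares answer, which is why a real pipeline filters the matches first.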

The Earlham College poster fair was also earlier this month. I wrote up and designed the poster detailing some highlights of our research; a PDF of the poster can be found here: uas-poster-2016.

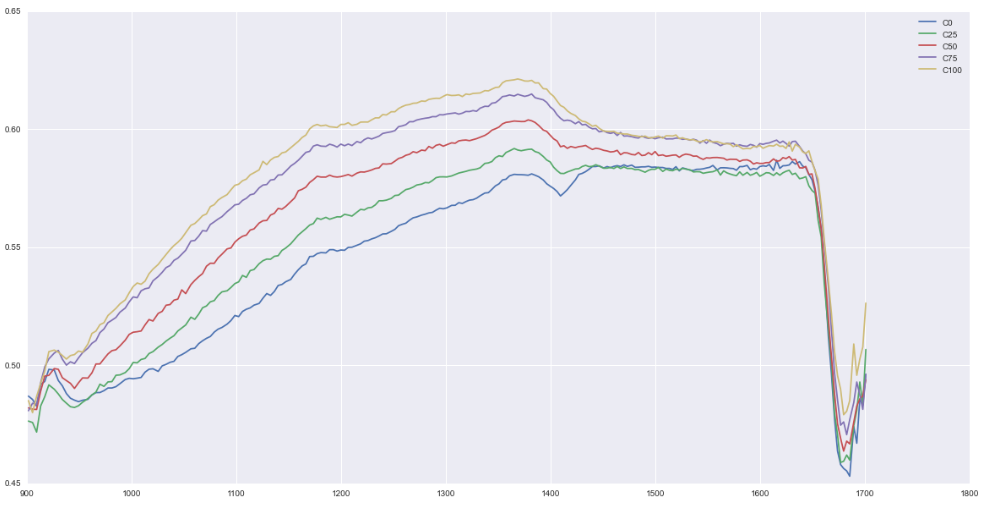

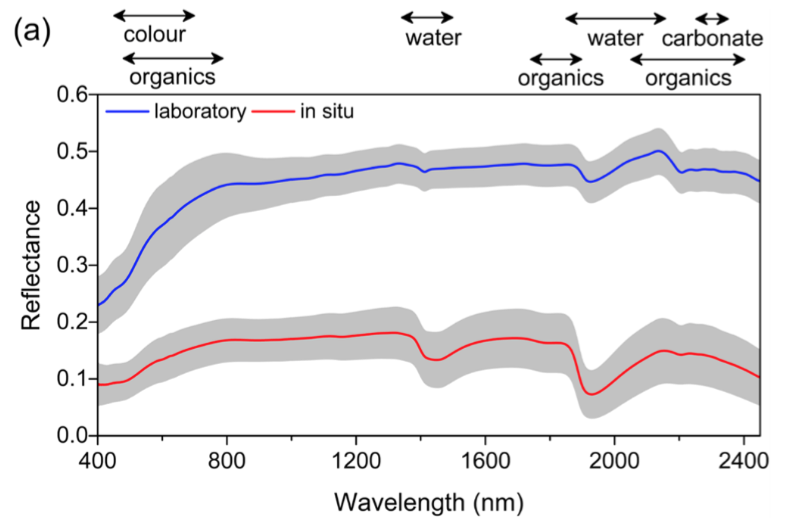

I suggested that Vitali and Niraj use OpenCV for object detection of archaeological sites. I’ve also started to look into how things like animal activity and vegetation health might be assessed through computer vision. Interestingly enough, vegetation is not only easy for computer vision to find, it can also yield rough estimates of how nitrogen-heavy the plants are.
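As a rough illustration of why vegetation is easy to pick out, here is a small sketch using the Excess Green index (2g − r − b on brightness-normalised channels), which is one common RGB-only greenness measure. I'm not saying this is the method we will end up using: the `threshold` of 0.1 and the function names are my own placeholders, and any link from greenness to nitrogen content would need calibrating against real samples.

```python
import numpy as np


def excess_green(rgb):
    """Excess Green index per pixel for an (..., 3) RGB array."""
    rgb = np.asarray(rgb, dtype=float)
    total = rgb.sum(axis=-1, keepdims=True)
    total[total == 0] = 1.0  # avoid dividing by zero on pure black pixels
    # Normalise each channel by overall brightness, then split into r, g, b.
    r, g, b = np.moveaxis(rgb / total, -1, 0)
    return 2 * g - r - b


def vegetation_mask(rgb, threshold=0.1):
    """Boolean mask of pixels that look like vegetation.

    threshold is a hypothetical starting value and would need tuning per camera.
    """
    return excess_green(rgb) > threshold


if __name__ == "__main__":
    # Tiny made-up image: one leafy pixel, one grey pixel, one black pixel.
    image = np.array([[[40, 160, 30], [100, 100, 100], [0, 0, 0]]], dtype=np.uint8)
    print(excess_green(image))
    print(vegetation_mask(image))
```

An image loaded with OpenCV comes back in BGR order, so the channels would need flipping before being passed in.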

I’ve continued to work with UGCS’s open software packages. I recently got custom map layers (i.e., GIS) to appear in the flight program. As the majority of the SDK is undocumented, it feels a lot like reverse engineering and takes more time than I would like.

This weekend I intended to fly a programmed flight over our campus to make the high-resolution map that Charlie had asked me for. I’ve since run into networking problems, though, and cannot proceed until I get them figured out: I cannot get an uplink to the UAV, but I can receive download and telemetry data. I suspect this is mostly a networking error, but it’s one that continues to confuse me.

Moving forward: first, my highest priority is to get the ground station working correctly again. Second, while Niraj and Vitali handle the computer vision software, I will start familiarising myself a bit more with QGIS.