Since my last post was a very long time ago, here is what has happened with the data clean-up over the past few weeks:

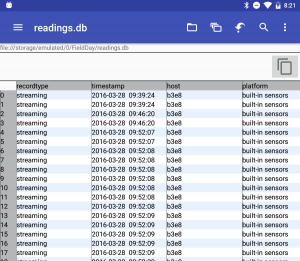

Eamon and I each cleaned up all the data we could find, working separately. When we compared our cleaned data sets, they turned out to be different: neither of us had all the data on our own, but combined, the two sets covered every permutation of the Iceland 2014 and Nicaragua 2014 data. Then, with Charlie's help, we were able to work out which data we needed to zorch. It turns out that a significant chunk of both data sets had to be zorched, because we each had thousands of rows of testing data or data recorded in the car while the group was driving.
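Combining the two cleaned sets and then zorching unwanted rows amounts to a set union followed by a filter. The sketch below is a minimal illustration in Python, assuming each reading can be represented as a hypothetical `Reading` tuple (timestamp, lat, lon, value) and that the rule for which rows to zorch can be written as a predicate. The real readings table almost certainly has more columns, and deciding what counted as testing or driving data took human judgment.

```python
from typing import Callable, Iterable, List, NamedTuple


class Reading(NamedTuple):
    # Hypothetical row layout for illustration; the real readings table differs.
    timestamp: str  # ISO 8601 text, e.g. "2014-07-02T14:05:00"
    lat: float
    lon: float
    value: float


def combine_and_zorch(
    mine: Iterable[Reading],
    theirs: Iterable[Reading],
    is_unwanted: Callable[[Reading], bool],
) -> List[Reading]:
    """Union two cleaned data sets, keep each identical row once, then drop unwanted rows."""
    combined = set(mine) | set(theirs)  # every row found in either set, exact duplicates merged
    kept = [r for r in combined if not is_unwanted(r)]  # zorch testing/driving rows
    return sorted(kept)  # tuples sort by timestamp first, giving a stable order


# Example: treat everything logged on a (hypothetical) testing day as unwanted.
mine = [
    Reading("2014-07-01T10:00:00", 64.1, -21.9, 0.0),
    Reading("2014-07-02T14:05:00", 64.1, -21.9, 3.2),
]
theirs = [
    Reading("2014-07-02T14:05:00", 64.1, -21.9, 3.2),
    Reading("2014-07-03T09:00:00", 12.1, -86.3, 5.0),
]
result = combine_and_zorch(mine, theirs, lambda r: r.timestamp.startswith("2014-07-01"))
```

Exact-duplicate matching like this only catches rows that are identical in every field; rows that differ slightly (for example, in how coordinates were rounded) would need a looser comparison.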

After far too much time spent wrestling data in spreadsheets, the readings table is finally in the field science database, where further clean-up relating to sectors and spots can be done. Right now, most of the Iceland 2014 data has no sector or spot information. With Charlie's help, I can now populate the sectors. This should be way quicker to do by date, time stamp and lat/long coordinates now that we have it all in the database.
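Populating sectors by time stamp can be expressed as a single SQL update that matches each reading's time stamp against a sector's time window. This is a sketch only: it uses an in-memory SQLite database and hypothetical `readings` and `sectors` tables whose `ts`, `start_ts` and `end_ts` columns hold ISO 8601 text. The actual field science database, its engine and its schema may look quite different, and real sector assignment may also need the lat/long coordinates.

```python
import sqlite3


def populate_sectors(conn: sqlite3.Connection) -> None:
    """Fill in missing sectors by matching each reading's time stamp to a sector's time window."""
    # Hypothetical schema: readings(ts, sector) and sectors(name, start_ts, end_ts).
    conn.execute(
        """
        UPDATE readings
        SET sector = (
            SELECT s.name FROM sectors AS s
            WHERE readings.ts BETWEEN s.start_ts AND s.end_ts
            LIMIT 1
        )
        WHERE sector IS NULL
        """
    )
    conn.commit()


conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE readings (id INTEGER PRIMARY KEY, ts TEXT, lat REAL, lon REAL, sector TEXT);
    CREATE TABLE sectors (name TEXT, start_ts TEXT, end_ts TEXT);
    INSERT INTO sectors VALUES ('A', '2014-07-02T09:00:00', '2014-07-02T12:00:00');
    INSERT INTO readings (ts, lat, lon) VALUES ('2014-07-02T10:30:00', 64.1, -21.9);
    INSERT INTO readings (ts, lat, lon) VALUES ('2014-07-02T15:00:00', 64.2, -21.8);
    """
)
populate_sectors(conn)
rows = conn.execute("SELECT ts, sector FROM readings ORDER BY id").fetchall()
```

Because the time stamps are stored as ISO 8601 strings in one fixed format, plain text comparison with `BETWEEN` orders them correctly. Readings that fall outside every window keep a NULL sector and can be reviewed by hand.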

Next up, I will be working on creating or adapting a function that measures the distance between a pair of lat/long coordinates. This should help with further clean-up and with the bounding box interface.
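One common choice for the distance between two lat/long points is the haversine formula, which gives the great-circle distance on a sphere. The sketch below assumes a spherical Earth with a mean radius of 6,371 km, which is typically accurate to within about 0.5% (an ellipsoidal method such as Vincenty's would be more precise). The name `haversine_m` is hypothetical and not an existing function in the project.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in meters (spherical approximation)


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in meters between two points given in decimal degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = math.radians(lat2 - lat1)
    d_lambda = math.radians(lon2 - lon1)
    a = (
        math.sin(d_phi / 2) ** 2
        + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2
    )
    # Clamp to 1.0 so floating-point rounding near antipodal points cannot break asin.
    return 2 * EARTH_RADIUS_M * math.asin(min(1.0, math.sqrt(a)))
```

For example, `haversine_m(0.0, 0.0, 1.0, 0.0)` returns roughly 111,195 m, the length of one degree of latitude on this sphere.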