Now that we’re a few weeks into the new semester, it’s time to stop procrastinating and get back to work!

I’ve started off with what feels like a mass update of hardware, software, firmware, etc. The DJI drone system and ground control software both had to be brought up to date. Initially there were a lot of errors in getting them talking to one another again, but as of Wednesday this week they are fully happy and working properly. I’ve also taken the chance to update the plug-ins and various parts of the website, and I’ve made accounts on the blog for Vitalii and Niraj, who will be joining us to work on some of the back-end coding.

In an effort to streamline our massive photo cache, I’ve copied all the photos onto our media server here on campus so we can get things organized. After talking with Charlie and Erin, we have decided to sort first by day and then by capture device. This means, though, that we have to map every photo to its correct day, which has proved to be more difficult than I thought… If the EXIF data on an image doesn’t contain a date, the date just falls back to the most recent “creation date”, which is the day I copied it. That’s left Erin and me with a whole lot of images that still need to be sorted and sifted by hand. I’m also still finding other places where images are stored, such as the Google Drive, and have been working on getting all of those onto the server as well. It’s sort of like a big game of cat and mouse and is tedious at best.
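For anyone curious, here is a rough sketch of the day-then-device rule in Python. It isn’t the script we actually run; the function name `destination_for`, the `sorted` root folder, and the `needs_review` folder are all made up for illustration. It also assumes the EXIF capture date and camera model have already been read out of the file by some other tool, and it only decides where a photo should go. Anything without a usable EXIF date lands in a review folder instead of being filed under the copy date.

```python
from datetime import datetime
from pathlib import Path
from typing import Optional

# Layout EXIF uses for capture timestamps, e.g. "2016:06:14 10:22:03".
EXIF_DATE_FORMAT = "%Y:%m:%d %H:%M:%S"


def destination_for(
    filename: str,
    exif_date: Optional[str],
    device: Optional[str],
    root: Path = Path("sorted"),  # hypothetical root folder
) -> Path:
    """Return root/YYYY-MM-DD/device/filename, or root/needs_review/filename
    when there is no usable EXIF date to sort by."""
    taken = None
    if exif_date:
        try:
            taken = datetime.strptime(exif_date.strip(), EXIF_DATE_FORMAT)
        except ValueError:
            # Missing, blank, or garbled date: do not trust the file's copy date.
            taken = None

    if taken is None:
        return root / "needs_review" / filename

    # Folder-friendly device name; fall back when the camera model is absent.
    device_dir = (device or "").strip().replace(" ", "_") or "unknown_device"
    return root / taken.strftime("%Y-%m-%d") / device_dir / filename


if __name__ == "__main__":
    print(destination_for("DJI_0042.JPG", "2016:06:14 10:22:03", "FC330"))
    print(destination_for("IMG_1001.JPG", None, "iPhone 6"))
```

Sending undated files to their own folder keeps the hand-sorting pile separate from everything that could be placed automatically.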

With Niraja and Vitalii joining us, I have also been doing my best to bring them up to speed on all the projects and the details therein. Much of this involves working with the UAV images and how to post-process them: trying things out in proprietary software first, and then figuring out how best to do the same thing in large batches with open source programs. Most recently I’ve been trying to figure out whether it’s possible to get a 3D topographic image without needing to apply a LIDAR point cloud layer. While not as accurate, it would certainly be quick and would be used more as an approximation.

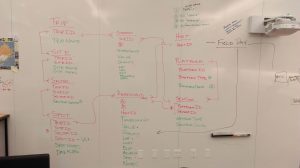

As time becomes available, I’ve also started going through all the video footage we have saved and throwing together a very (VERY) rough storyboard for the documentary. I’m hoping to have a solid chunk of time this weekend to work on this a bit more. Really, I’m just focused on getting some generic shots worked together so that we can use them as filler for voice-over and context.

As things move along I’ll keep posting! Cheers!