A lot has happened since my last blog post, which was a very long time ago. Here is a breakdown by platform and by sensor:

Near IR spectroscopy: the rise and fall of the SCIO

At the time of my last post, I was eagerly awaiting the arrival of the SCIO. The machine itself was something of a disappointment. Under the hood it’s basically a tricked-out CCD camera, and the raw data is completely closed. That was a letdown, but we rallied. Instead I am planning to try out Texas Instruments’ DLP (R) NIRscan (TM) Nano. It’s a bare-bones, open source spectroscopy platform that covers an impressive 900-1700 nm range. In the meantime, Stephanie, Mike and I have been developing calibration curves for the Nano with the FTIR. The results thus far have been very promising: we have identified a peak in the IR spectrum that varies almost linearly with organic content. We are also planning to take spectral data from Icelandic soil samples currently residing in Heather’s ancient DNA lab. We will follow sterile protocol and remove a small amount of soil for the FTIR scan.
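Since the peak looks almost linear in organic content, the calibration curve can be sketched as an ordinary least-squares line. This is only a minimal illustration, not our actual calibration: the function names `fit_line` and `predict_organic_content` are made up here, and the peak heights and organic content values you feed it would come from the FTIR scans of samples with known organic content.

```python
def fit_line(peak_heights, organic_contents):
    """Least-squares fit of organic_content = slope * peak_height + intercept."""
    n = len(peak_heights)
    if n < 2 or n != len(organic_contents):
        raise ValueError("need at least two paired calibration points")
    mean_x = sum(peak_heights) / n
    mean_y = sum(organic_contents) / n
    # Sums of squares and cross-products about the means
    sxx = sum((x - mean_x) ** 2 for x in peak_heights)
    sxy = sum((x - mean_x) * (y - mean_y)
              for x, y in zip(peak_heights, organic_contents))
    if sxx == 0:
        raise ValueError("all peak heights are identical; cannot fit a line")
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict_organic_content(peak_height, slope, intercept):
    """Apply the fitted calibration line to a new peak height."""
    return slope * peak_height + intercept
```

If the relationship turns out to be only roughly linear, the residuals from this fit would be the first thing to look at before trusting it in the field.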

I have also considered the idea of building a visible/NIR spectrometer. This would allow us to take spectral readings from both the visible and IR ranges of the electromagnetic spectrum and cover more possible organic content peaks. The hardest part about building a spectrometer is not actually the optics but powering and reading the CCD. I have found a linear NIR CCD and possible Arduino code for driving it, but I’m not sure I will have time to optimize it before we leave, so I’m back-burnering it for now until I make more progress on color conversion and the OC sensors.

Munsell Color and pH

Using an RGB sensor to get an approximate Munsell color would save us time on comparison and data entry. A visible-light spectrometer could also corroborate the Munsell color values, if I were to build one.

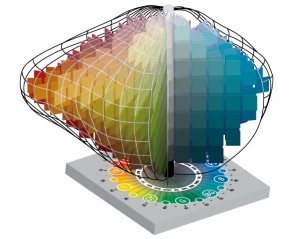

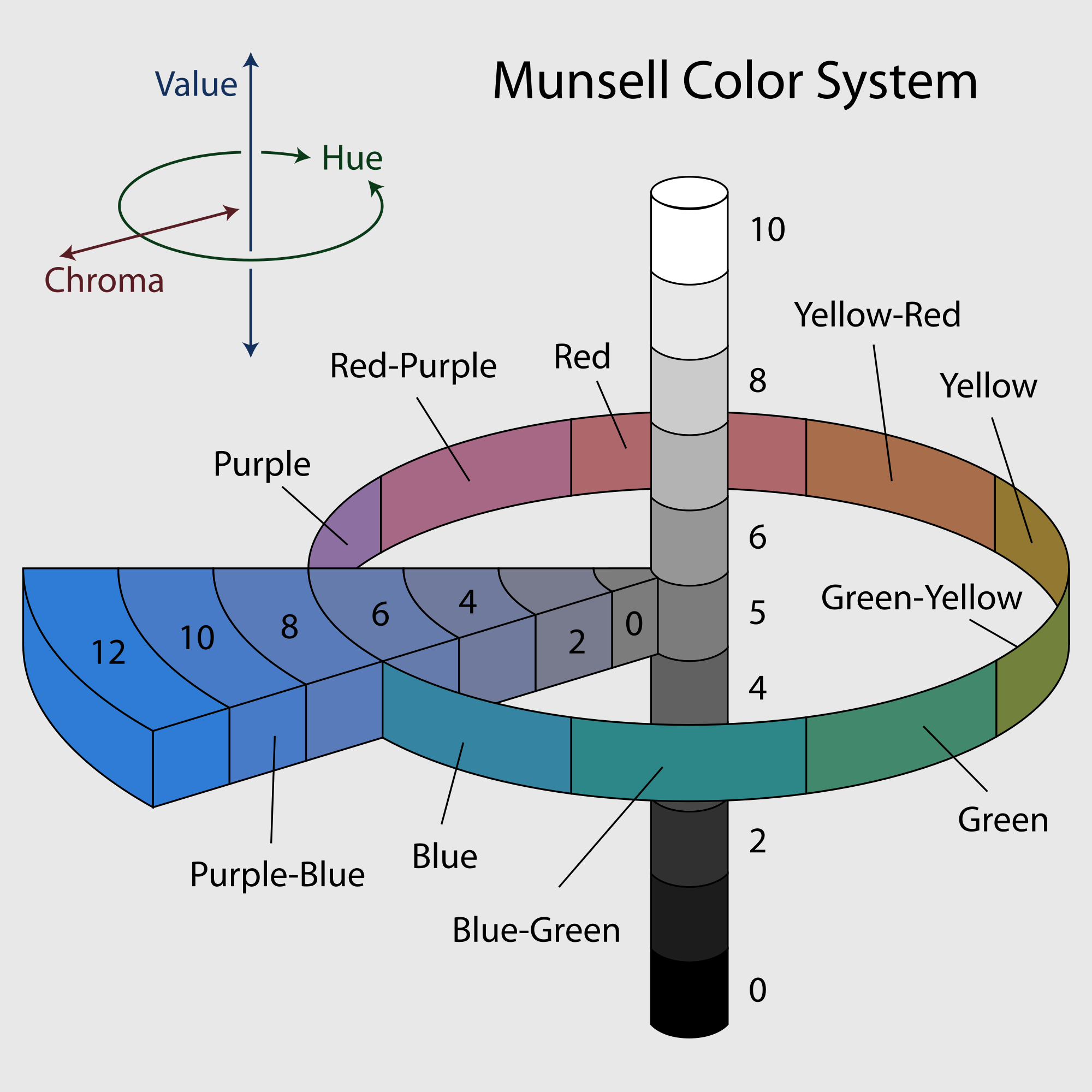

This week I have been learning a lot about color spaces. The RGB sensor takes values that live in RGB space. Munsell color space is a 3D space with axes (value, hue, chroma) that looks like this:

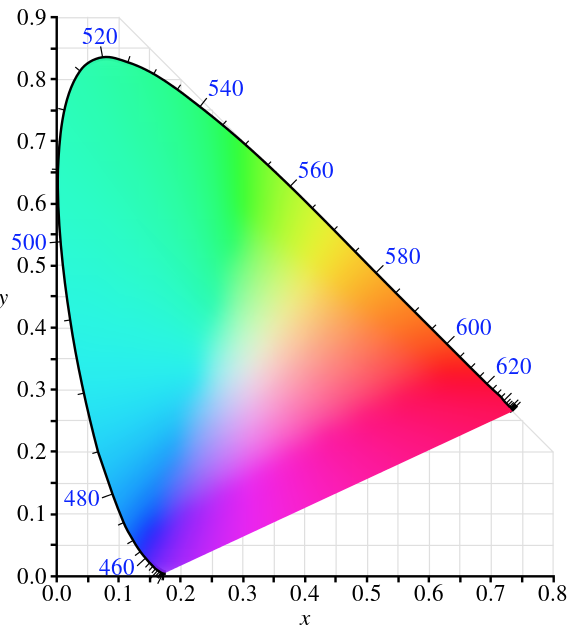

For Munsell color I have a table from RIT that converts Munsell values (hue, value, chroma) to XYZ, the color space bounded by the spectral colors that describes the colors the human eye observes. The conversion from XYZ to RGB is published, so I will just be going backwards: from RGB to XYZ, and from XYZ to Munsell.
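Here is a rough sketch of what going backwards could look like. It makes some big assumptions: that the sensor readings can be treated as 8-bit sRGB (a raw sensor will need its own calibration), and that the RIT table has been loaded into a dict from Munsell label to an XYZ triple on the same scale and white point as the sensor values. The names `srgb_to_xyz` and `nearest_munsell` are mine, not from any library.

```python
import math


def srgb_to_xyz(r, g, b):
    """Convert 8-bit sRGB to CIE XYZ (D65 white, Y scaled to 0..1)."""
    def linearize(c8):
        # Undo the sRGB transfer curve to get linear light
        c = c8 / 255.0
        return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4

    rl, gl, bl = linearize(r), linearize(g), linearize(b)
    # Standard sRGB-to-XYZ matrix
    x = 0.4124 * rl + 0.3576 * gl + 0.1805 * bl
    y = 0.2126 * rl + 0.7152 * gl + 0.0722 * bl
    z = 0.0193 * rl + 0.1192 * gl + 0.9505 * bl
    return (x, y, z)


def nearest_munsell(xyz, table):
    """Return the label in `table` whose XYZ entry is closest to `xyz`.

    `table` is a hypothetical dict like {"<munsell label>": (X, Y, Z), ...}
    built from the lookup table; it is not included here.
    """
    return min(table, key=lambda label: math.dist(xyz, table[label]))
```

Straight-line distance in XYZ is not perceptually even, so picking the nearest entry this way is only a first pass; a difference-oriented space may work better for the lookup too.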

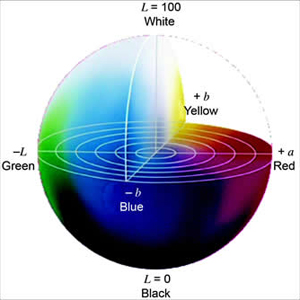

For pH I am interested in color difference. I might be able to use XYZ as with Munsell, or I might use L\*a\*b\*, which is designed to make color differences easier to compute.
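To make the color-difference idea concrete, here is a minimal sketch of the standard XYZ to L\*a\*b\* conversion and the simplest difference measure (CIE76, which is just straight-line distance in L\*a\*b\*). I am assuming a D65 white point with Y scaled to 1; the function names are my own, and I have not settled on this as the method for the pH readings.

```python
import math

# Assumed reference white (D65, Y scaled to 1.0)
D65_WHITE = (0.95047, 1.0, 1.08883)


def xyz_to_lab(xyz, white=D65_WHITE):
    """Convert a CIE XYZ triple to CIE L*a*b* relative to `white`."""
    delta = 6.0 / 29.0

    def f(t):
        # Cube root above the threshold, linear segment below it
        if t > delta ** 3:
            return t ** (1.0 / 3.0)
        return t / (3.0 * delta ** 2) + 4.0 / 29.0

    fx, fy, fz = (f(c / w) for c, w in zip(xyz, white))
    L = 116.0 * fy - 16.0
    a = 500.0 * (fx - fy)
    b = 200.0 * (fy - fz)
    return (L, a, b)


def delta_e76(lab1, lab2):
    """CIE76 color difference: Euclidean distance in L*a*b*."""
    return math.dist(lab1, lab2)
```

One possible use would be comparing a reading against a set of reference colors and taking the one with the smallest difference.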

Field Sensor

The field sensor contains IR temperature and soil moisture sensors (and now I’m thinking conductivity as well). This is where I am trying to jump in with BLE. These sensors just need to pass an int or a tuple to Field Day. I am using a RedBearLab BLE shield. The world of Arduino is all pretty new to me and still confusing, but I’m making progress.
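Since each sensor only has to send an int or a small tuple, the payload can be tiny. Below is a sketch of one possible byte layout, written in Python just to think it through; it is entirely hypothetical and not the format Field Day actually expects. I am assuming one byte for a made-up sensor id, one byte for the number of values, then each value as a little-endian signed 16-bit int, all within the 20 bytes a default BLE packet can carry.

```python
import struct

MAX_PAYLOAD = 20  # usable bytes in a default-size BLE packet


def pack_reading(sensor_id, values):
    """Pack a sensor id and a tuple of ints into a small byte string.

    Layout (hypothetical): uint8 id, uint8 count, then `count` int16 values,
    all little-endian.
    """
    n = len(values)
    payload = struct.pack("<BB%dh" % n, sensor_id, n, *values)
    if len(payload) > MAX_PAYLOAD:
        raise ValueError("too many values for one packet")
    return payload


def unpack_reading(payload):
    """Inverse of pack_reading: returns (sensor_id, tuple_of_values)."""
    sensor_id, n = struct.unpack_from("<BB", payload, 0)
    values = struct.unpack_from("<%dh" % n, payload, 2)
    return sensor_id, values
```

For example, a temperature of 21.5 degrees could be sent as the int 215 (tenths of a degree), so nothing has to deal with floats on the Arduino side.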

I am also jumping into OpenSCAD to redesign the field case to accommodate the BLE shield and IR sensor.

OC Meter

I gave up on the idea of building a scanning laser with a stepper motor. I think I can get enough precision with an array of white LEDs, an array of photodetectors, and a black box. Redesigning now.