I have accomplished quite a bit within the past week, if I do say so myself. Last week, I said that I had begun working on the BLE part of Field Day. Well, after some struggle I finished it! I am able to send a request to the Arduino device and upon receiving that request, the Arduino device sends a message back to the android device and writes to he screen (woo!). But the code is not pretty. Android provides a Bluetooth Low Service example in Android Studio, but it uses deprecated code. I researched and was able to modernize it.

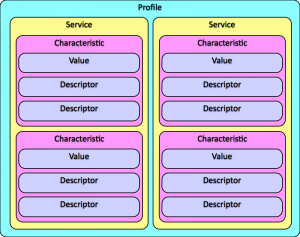

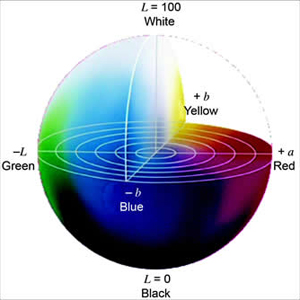

Bluetooth Low Energy is pretty complicated as a service. It uses something called GATT (Generic Attribute Profile). The bluetooth server (arduino device) has a GATT profile and within that GATT profile there are services and services have characteristics. Each service can have multiple characteristics, but there can only be one TX and one RX (read and write) characteristic per service. I learned this the hard way. You can see a diagram of what I’m talking about below.

For the arduino side, we use Red Bear Labs BLE shield. There is a standard BLE library for the arduino but it’s really complex. I’ve read over the code multiple times and I’m just now grasping a little bit of it. RBL has constructed a wrapper for that code. They broke it down into 10 or so different functions, which I must say is mighty useful. I used that when constructing the Arduino code.

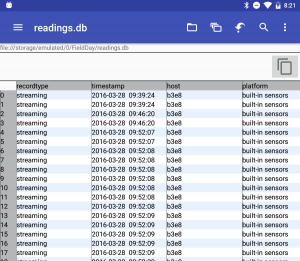

During my coding of the BLE on android I discovered that most of our Fragments of sensors are all the same except for the names of them. After talking with Charlie we’ve decided that we’re going to get rid of the individual fragments for each sensors and just have one that is ‘Bluetooth Sensors.’ I’m working on moving the BLE code to that setup now. Hopefully I will be done with it within the day.