Kristin and I wrote a long email to Oli @ Skalanes with a bunch of answers and questions for him; his reply arrived this morning. I spoke with Tara about soil sampling protocols and parameters, Erin about infrared imaging for the nest sites and scaling aerial imagery, and Deeksha and Eamon about data models and data conversion. We also talked about where the various older data sets are that need to be harvested and brought into the central data store on the field science machine.

Skalanes Nesting Birds

After receiving Bernard Lundie’s information, I was able to make headway on nesting birds. He sent me a complete document listing the birds he knows to have nested around Skalanes, including waterfowl, grouse, waders and passerines. Eiders (waterfowl) and Arctic Terns seem to be the most common nesters. The list is extensive, so narrowing down a specific research question for the nesting bird survey will be key. Do we look more closely at nesting Arctic Terns, or do we just survey nest totals in the area, disregarding species? I also spoke with Earlham’s ornithologist, Wendy Tori, and she sent me several papers on Arctic Terns. We talked a little about methods, but she has never done anything quite like this. The final challenge this week has been getting the thermal camera, which is still an issue and might take some more time.

Joys of Reconciliation

I’ve mainly spent time identifying the specific differences in the data formats we’ve used over the last two years. There are some differences between the Iceland 2014 data and the Nicaragua 2015 data, and even more significant differences between the Iceland 2013 data and the rest of what we’ve collected. To start putting together the pieces of what we have and cleaning them up effectively, we need to locate and tag everything we can find, which is what I’ve been up to.

I now have a master table of sorts for the Iceland 2014 and Nicaragua 2015 data, so I know what we need to add or modify, and where, to get a consistent pattern in our data.

I’ve been working on what additional functionality we want our data model to accommodate before we go back, and on how to fit the pieces we do have into the data model we decide is best this year.
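As a rough illustration of what reconciling the formats could look like, here is a minimal sketch that renames each campaign’s columns onto one shared schema. The campaign keys, column names and sample values are all made up for the example; the real mapping would come from the master table.

```python
# Hypothetical per-campaign column names mapped onto one shared schema.
# None of these names come from the real data sets.
FIELD_MAPS = {
    "iceland2014": {"lat": "latitude", "lon": "longitude", "temp": "temperature_c"},
    "nicaragua2015": {"Latitude": "latitude", "Longitude": "longitude", "TempC": "temperature_c"},
}


def normalize(record, campaign):
    """Rename a record's keys to the shared schema and tag where it came from."""
    mapping = FIELD_MAPS[campaign]
    # Keys without a mapping entry are passed through unchanged.
    unified = {mapping.get(key, key): value for key, value in record.items()}
    unified["campaign"] = campaign
    return unified


# Illustrative values only.
rows = [
    normalize({"lat": 65.0, "lon": -13.0, "temp": 8.1}, "iceland2014"),
    normalize({"Latitude": 11.0, "Longitude": -85.0, "TempC": 27.4}, "nicaragua2015"),
]
```

Keeping the mapping in one table like this would make it easy to add Iceland 2013 later without touching the renaming logic.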

Web Development done Python-style

Over the past couple of weeks I’ve been looking into lightweight frameworks for doing web development. I’ve been experimenting with Flask, which is amazingly simple.

I did some Python/Bash scripting to manipulate the data left over from Iceland, and cleaned it up so it all fits into one database schema. This should be updated later to comply with whatever standard we all decide on.

I’m beginning to like the idea of developing our data interfacing software under an API-based model of programming. I think it is beneficial to decouple the database from the front end. I’m considering developing a RESTful API that returns data in JSON format, which can be interpreted and displayed on screen by some kind of JavaScript-based web app. This may also give us the flexibility to integrate data into Android or desktop apps later.
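To make the idea concrete, here is a minimal sketch of the database-to-JSON half of such an API, using only Python’s standard library. The `readings` table, its columns and the sample rows are assumptions for illustration, not our actual schema. In Flask, a function like this could sit behind a route so that any client only ever sees JSON.

```python
import json
import sqlite3


def readings_as_json(conn, sensor):
    """Return all readings for one sensor as a JSON string."""
    # sqlite3.Row lets each result row be converted to a dict by column name.
    conn.row_factory = sqlite3.Row
    rows = conn.execute(
        "SELECT sensor, taken_at, value FROM readings "
        "WHERE sensor = ? ORDER BY taken_at",
        (sensor,),
    ).fetchall()
    return json.dumps({"sensor": sensor, "readings": [dict(row) for row in rows]})


# Hypothetical in-memory database standing in for the real data store.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE readings (sensor TEXT, taken_at TEXT, value REAL)")
conn.executemany(
    "INSERT INTO readings VALUES (?, ?, ?)",
    [
        ("soil_temp", "2015-01-01T10:00:00", 7.5),
        ("soil_temp", "2015-01-01T10:05:00", 7.8),
        ("soil_ph", "2015-01-01T10:00:00", 6.2),
    ],
)
payload = readings_as_json(conn, "soil_temp")
```

Because the front end only depends on the shape of the JSON, the database behind it can change without breaking a web, Android or desktop client.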

One of the big tasks yet to do is to figure out how to send the data between the client application and the database server. Does anyone have any ideas as to how to do that?

Lasers! (And also other sensors)

Scanning optical organic matter sensor

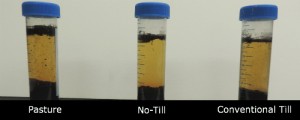

Organic content can be measured in a variety of ways. The most accurate tests involve measuring the amount of combustible carbon-based matter in a sample by putting it in a chemical oven. In a test like the one pictured below, soil is allowed to sit undisturbed in a Falcon tube for a period of time, during which the available organic matter floats to the top.

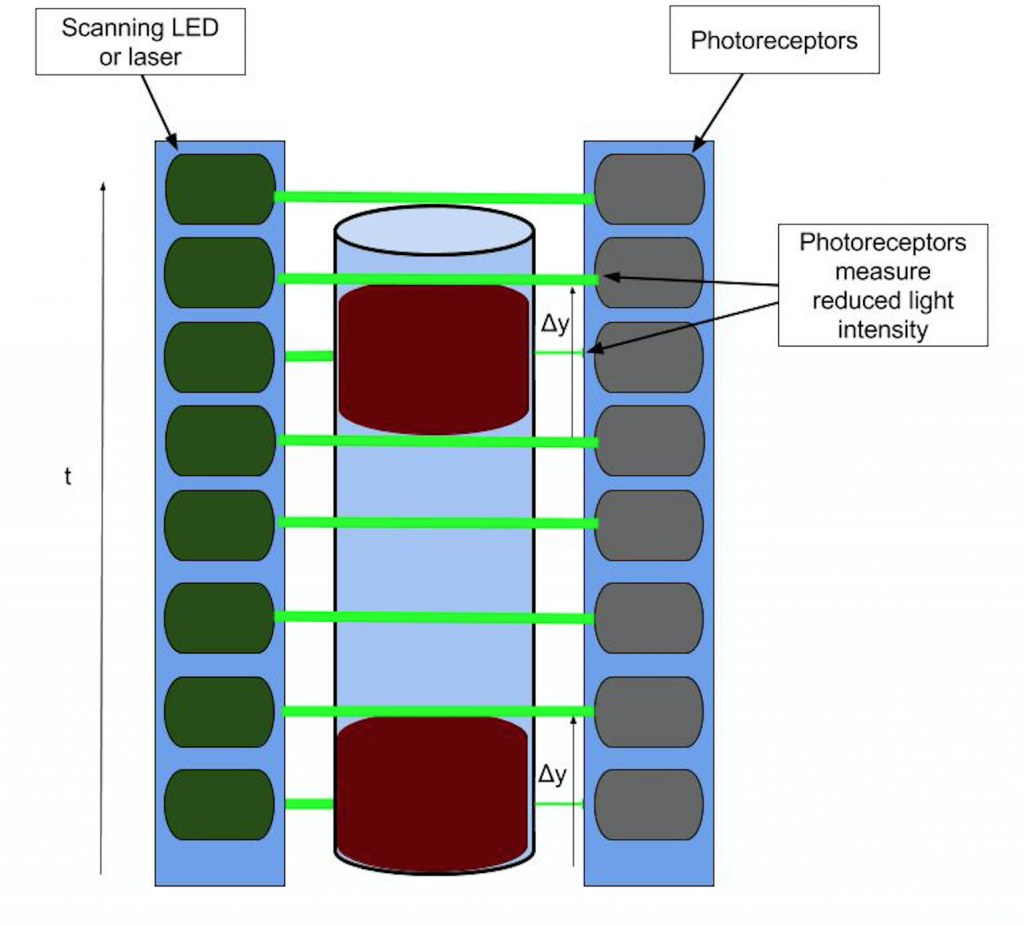

I would like to use a combination of a laser and a photometer to make a scanning optical device that moves upward from the bottom of the tube. An Arduino would log the intensity of the light that passes through the tube. The intensity would be reduced over the section of the range that is obstructed by organic matter (or by mineral matter at the bottom). I will write software to measure the length of this band and then calculate the volume from this value (thickness*pi*r^2). This method would be faster and more consistent than measuring each band individually by eye.
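The band measurement could be sketched roughly as follows. This assumes the scan arrives as a list of intensity readings taken at a fixed step from the bottom of the tube upward, that a single threshold separates obstructed from clear readings, and that the topmost dark band is the floating organic matter. The threshold, step size, tube radius and readings are all invented for the example, and the tube is treated as a plain cylinder.

```python
import math


def dark_bands(intensities, threshold, step_mm):
    """Return (start_mm, thickness_mm) for each contiguous run of readings below threshold."""
    bands = []
    run_start = None
    for i, value in enumerate(intensities):
        if value < threshold and run_start is None:
            run_start = i  # a dark band begins here
        elif value >= threshold and run_start is not None:
            bands.append((run_start * step_mm, (i - run_start) * step_mm))
            run_start = None
    if run_start is not None:  # a band that reaches the top of the scan
        bands.append((run_start * step_mm, (len(intensities) - run_start) * step_mm))
    return bands


def band_volume_ml(thickness_mm, radius_mm):
    """Cylinder volume thickness*pi*r^2, converted from mm^3 to mL."""
    return thickness_mm * math.pi * radius_mm ** 2 / 1000.0


# Made-up scan: mineral layer at the bottom, clear water, organic band near the top.
scan = [120, 130, 125, 800, 820, 810, 790, 300, 280, 310, 805]
bands = dark_bands(scan, threshold=500, step_mm=2.0)
organic_start_mm, organic_thickness_mm = bands[-1]  # assume the topmost band is organic
volume = band_volume_ml(organic_thickness_mm, radius_mm=13.5)
```

The resolution of the thickness is limited by the step size, so a finer scan step gives a better volume estimate.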

Electrical Conductivity & pH Sensing Platform

This platform will also be designed for benchtop use because soil samples need significant time to stabilize before accurate readings can be collected. I am focusing on sensors that connect via BNC because they seem to be the cheapest option for Arduino interfacing.

The pH interface will be based on this implementation from Sparky’s Widgets (a great all-around resource for electrode sensing applications).

I will try to build this interface for the pH probe first and then see how easy it is to modify to read EC. Sparky’s Widgets offers an integrated solution for purchase, but I would like to reengineer it myself if possible.

Temperature and Moisture Field Sensor

This is the only sensor platform I am designing for field use. Right now I am thinking I will design the temperature portion of the sensor to be used right after the soil core sample is taken. I am planning to use infrared sensing in the resulting ~10 cm hole to measure soil temperature. There are a lot of options out there for infrared sensing; here is a SparkFun sensor I am looking into. I need to get an idea of what precision is necessary for this application.

For moisture I am planning to use a very robust sensor from Adafruit that has been used by students in the past. Building the circuit for this sensor might be a good place to start since I have models to work off of.

NPK and Munsell Color Optical Sensor

A lot of soil nutrient tests involve chemical reactions that lead to color changes. I am interested in using RGB sensors to find a precise value for the color of a solution so I can determine its nutrient density. The Munsell color test is the official color metric for soil research. I am now researching ways to use RGB LEDs and a photosensor to test the color of an object. A sensor capable of detecting color could be used for both benchtop NPK tests and Munsell color testing.
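One simple way to turn an RGB reading into a test result would be to compare it against readings taken from known reference solutions and pick the closest one. The sketch below assumes that approach; the calibration values and labels are hypothetical, and plain Euclidean distance in RGB is only a crude stand-in for a proper color-difference measure.

```python
import math

# Hypothetical calibration table: sensor RGB reading for each reference solution.
STANDARDS = {
    "low": (230, 220, 120),
    "medium": (200, 150, 90),
    "high": (150, 80, 60),
}


def closest_standard(rgb, standards=STANDARDS):
    """Return the label of the reference color nearest to rgb (Euclidean distance)."""
    return min(standards, key=lambda label: math.dist(rgb, standards[label]))


reading = (195, 155, 95)  # made-up sensor reading
label = closest_standard(reading)
```

The same lookup could in principle be pointed at a table of Munsell chip readings instead of NPK standards, provided the lighting is kept consistent between calibration and measurement.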

App dev thoughts

I’m slowly coming to the conclusion that the Android platform is a tinkerer’s dream and that there are hardly any restrictions to get in your way (iOS, I’m looking at you). I’ve spent the week continuing to learn the ways of Java and Android Studio, but have started to shift my thoughts to how to implement and set up the new $FIELDSCIENCE app. From exploring the source code and the app itself, I know the general nature of what is needed in the app (i.e., ambiance, temp, notepad, etc.), and I am brainstorming new ways to implement these features while taking note of the things I like from the original app. Things feel like they are starting to get rolling!

Yoctopuce and Bluetooth

I’ve been doing some research on Yoctopuce and on using Bluetooth, as opposed to USB, to communicate with devices. I could not find any information on using Yocto devices with Bluetooth. The only officially available options are Wi-Fi, USB or Ethernet. This is saddening. Our Yocto ambiance platform is really top-notch, but we need to get rid of cables. They are just not practical: they come unplugged randomly, and we lose a lot of data while they are disconnected. Bluetooth is the only practical option.

I’ve been looking into Arduino sensors from SparkFun and Adafruit to see if we can get the same functionality (and precision) that we get with Yocto devices.

GitLab, and archiving!

FINALLY, we have a stable Git environment. On hopper (cluster.earlham.edu) I’ve set up something called GitLab. GitLab is an open source Git repo hosting environment. For those of you who are familiar with GitHub, GitLab is like a self-hosted version of that. I chose GitLab because it’s private (on GitHub, you have to pay for a premium membership to have private repositories), and because it’s cleaner and has better access control than just doing it through a user’s directory on the machine.

You can see the GitLab setup here: Earlham GitLab. There’s a group called field-science where we are going to host all our code. I’ve already created projects for our Android work. The old Android code is now in the archive-android project. This means that it is read-only! Yay! We are starting fresh for the Android $FIELDSCIENCE app. There’s a new project called FieldScience that we will use for the new app; I’ve already created the shell for it in Android Studio and pushed it to GitLab. Yay for cleaning and organization.

On GitLab, we will store the visualization code, the database code, and the Arduino/sensor code. All the code! This makes it a lot easier to find stuff later (our old Arduino code is all over the place).

Testing and nesting?

This week I started to look in more detail into what we have and what we need, and did some more research. I tried out the department’s Nikon range finder in different scenarios and from different distances. I also emailed the necessary person about checking out the thermal camera that we have. I read some more papers on thermography in the natural sciences, especially in birds. Finally, I looked into different websites on Icelandic birds, especially those at Skalanes. The issue is that there is a lot of information on birds you can see in the area at different times of year, but not much on nesting birds, including which birds nest there and when they nest. The information must be somewhere, but it will take a little more research. I looked into the Arctic Tern as well, which turns out to be a medium-sized tern, but hopefully that will not be an issue.

Information about arctic terns: http://www.allaboutbirds.org/guide/Arctic_Tern/lifehistory

What has changed?! $FIELDSCIENCE & YoctoLib

In our $FIELDSCIENCE Android application we use a library from Yoctopuce.com called YoctoLib which works with hardware purchased from them. We use Yoctopuce hardware in our Ambiance platform, and in the Ambiance skin in the app.

This library and code were working (recognizing the USB Yoctopuce devices plugged into the device and reading sensor data from them) the last time I used them (~July). Since then, something has gone wrong. The application will no longer read data from the plugged-in sensors. I finally got it to at least recognize the device, but no data is being read. I suspect that this happened because of the move to Android Studio. Android Studio must have internally changed the way it uses APIs, which is what I am trying to figure out.

This further pushes me to believe that switching to Bluetooth to use the Yocto devices is necessary. Since the Yocto devices have to plug in via USB, each time I need to test the code, I have to upload it to the Android device and then unplug the device from the computer so I can plug in the Yocto devices. This makes debugging difficult. If the device is plugged into the computer, Android Studio constantly logs messages from any application to the screen in real time. Android keeps log messages even if the device is unplugged, and will load them once the device is plugged back in, but then all the messages from the test (which is A LOT) arrive at once. It’s hard to go back through them and figure out where something went wrong.