Unfortunately, we haven’t been keeping an overall summary each week, but that changes starting this week.

In our meeting on September 28th, members reported a lot of progress and brought new information. Charlie and I had received an email from Oli (our contact at Skalanes in Iceland) with answers to our questions about specific projects. Oli is very interested in the Bird Nest Site Survey and in the Sustainable Energy project to power the ranch. For the latter, he sent a link to, and information about, the weather station nearest to Skalanes, from which we can retrieve averages for different types of weather (wind, solar, etc.). We are keeping this project in mind as we progress, but no member is focusing on it as their one project right now.
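Once we pull data from that weather station, the first thing we will want is per-month averages for each variable. Below is a minimal sketch, assuming each reading has already been parsed into a hypothetical `(month, wind_speed_m_s, solar_w_m2)` tuple; the station's actual export format isn't described here, so the record layout and the `monthly_averages` name are our own placeholders.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical record layout: (month, wind speed in m/s, solar irradiance in W/m^2).
# The real station export format is an assumption, not something Oli specified.
Reading = tuple[int, float, float]


def monthly_averages(readings: list[Reading]) -> dict[int, dict[str, float]]:
    """Group readings by month and average each weather variable."""
    by_month: dict[int, list[Reading]] = defaultdict(list)
    for reading in readings:
        by_month[reading[0]].append(reading)
    # Build the result in month order so it reads naturally when printed.
    return {
        month: {
            "wind": mean(r[1] for r in rows),
            "solar": mean(r[2] for r in rows),
        }
        for month, rows in sorted(by_month.items())
    }


if __name__ == "__main__":
    sample = [(6, 5.0, 200.0), (6, 7.0, 300.0), (7, 4.0, 250.0)]  # made-up values
    print(monthly_averages(sample))
```

Averages like these would be a starting point for sizing wind or solar equipment for the ranch.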

Erin and Ben are the two members focusing on the Bird Nest Site Survey. Erin has been in contact with Bernard, a Scottish student we met last time in Iceland, who has given her a lot of information on all the different types of birds at Skalanes. Erin is trying to research the exact nesting times for all of the birds at Skalanes, and which birds will be nesting when we go next summer. A question that still needs to be answered is: which birds do we care about surveying? Only the endangered ones? Erin and Ben have also acquired the thermal camera and will begin testing it. Oli said he is very keen on using a drone for the survey, to avoid trampling through the birds’ nesting areas.
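Once Erin has the nesting times, working out which birds will be nesting during our trip is an interval-overlap check. Below is a minimal sketch; the species names and all dates are hypothetical placeholders (not Bernard's data), and dates are stored as `(month, day)` tuples, assuming no nesting window wraps past the end of the year.

```python
# Dates are (month, day) tuples; Python compares tuples element by element,
# so (6, 10) < (7, 1). Assumes no window crosses December 31st.
MonthDay = tuple[int, int]

# Hypothetical placeholder windows; real values would come from Erin's research.
NESTING_WINDOWS: dict[str, tuple[MonthDay, MonthDay]] = {
    "species_a": ((5, 1), (6, 15)),
    "species_b": ((6, 10), (7, 31)),
    "species_c": ((8, 5), (9, 1)),
}


def nesting_during(
    trip_start: MonthDay,
    trip_end: MonthDay,
    windows: dict[str, tuple[MonthDay, MonthDay]] = NESTING_WINDOWS,
) -> list[str]:
    """Return the species whose nesting window overlaps the trip (inclusive)."""
    # Two intervals overlap when each one starts before the other ends.
    return sorted(
        name
        for name, (start, end) in windows.items()
        if start <= trip_end and trip_start <= end
    )


if __name__ == "__main__":
    print(nesting_during((6, 20), (7, 10)))
```

The same check could then be filtered by whatever species list we settle on (for example, only endangered birds).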

Oli also told us that the Archaeological Site projects will have to be put on the back burner for now, because their license for the sites has expired. We are going to ask him whether we can do something to identify more sites without actually entering or digging at them. We have considered and researched archaeological site survey techniques (geophysical surveying); some of the options are ground-penetrating radar, magnetometry, and electrical resistance and conductivity surveys.

While at Skalanes last summer, we noticed that the internet was extremely slow and unreliable, and Oli noted in his last message that it has gotten even worse. Nic suggested using a balloon to provide internet access, which may work: Google has been doing this for a while under Project Loon (‘Loon for All’), which aims to bring internet access to areas that do not have it.

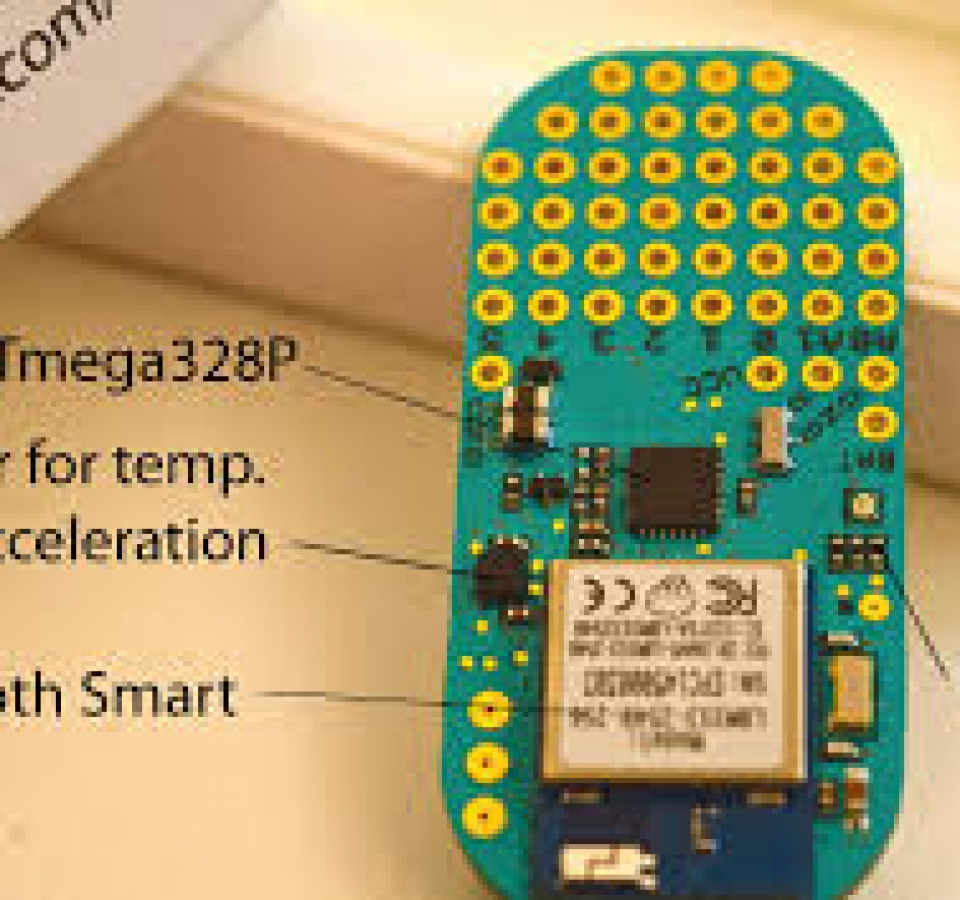

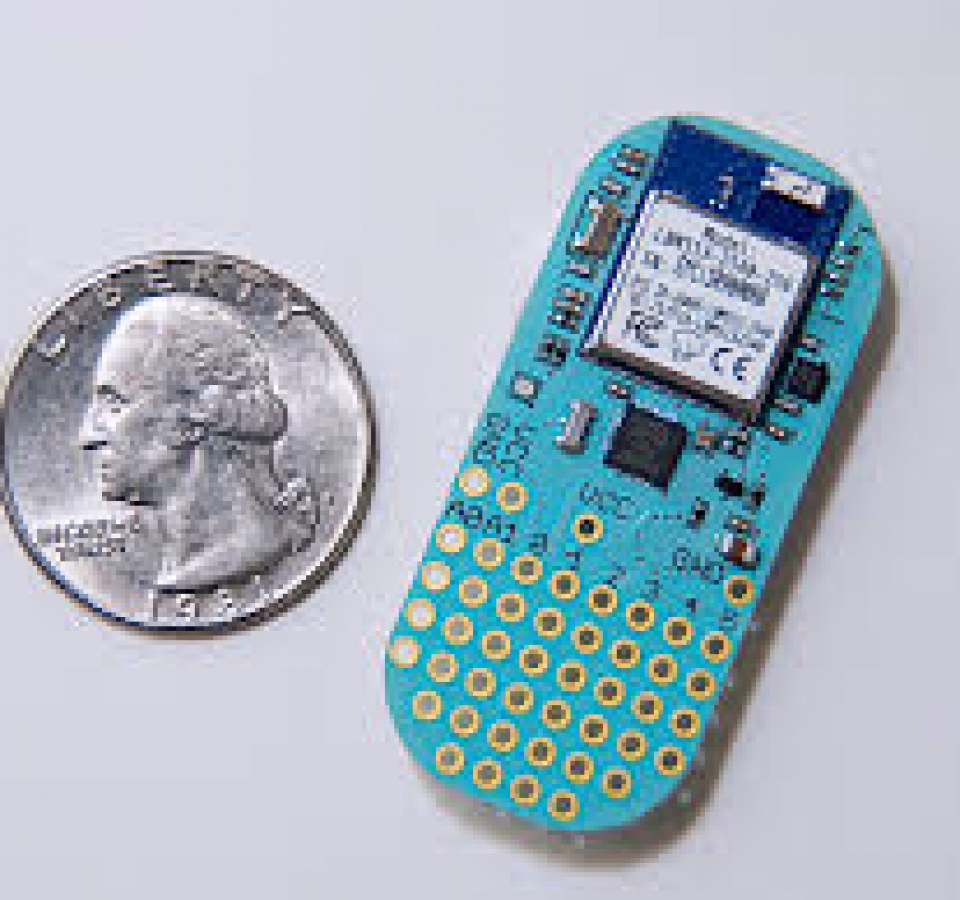

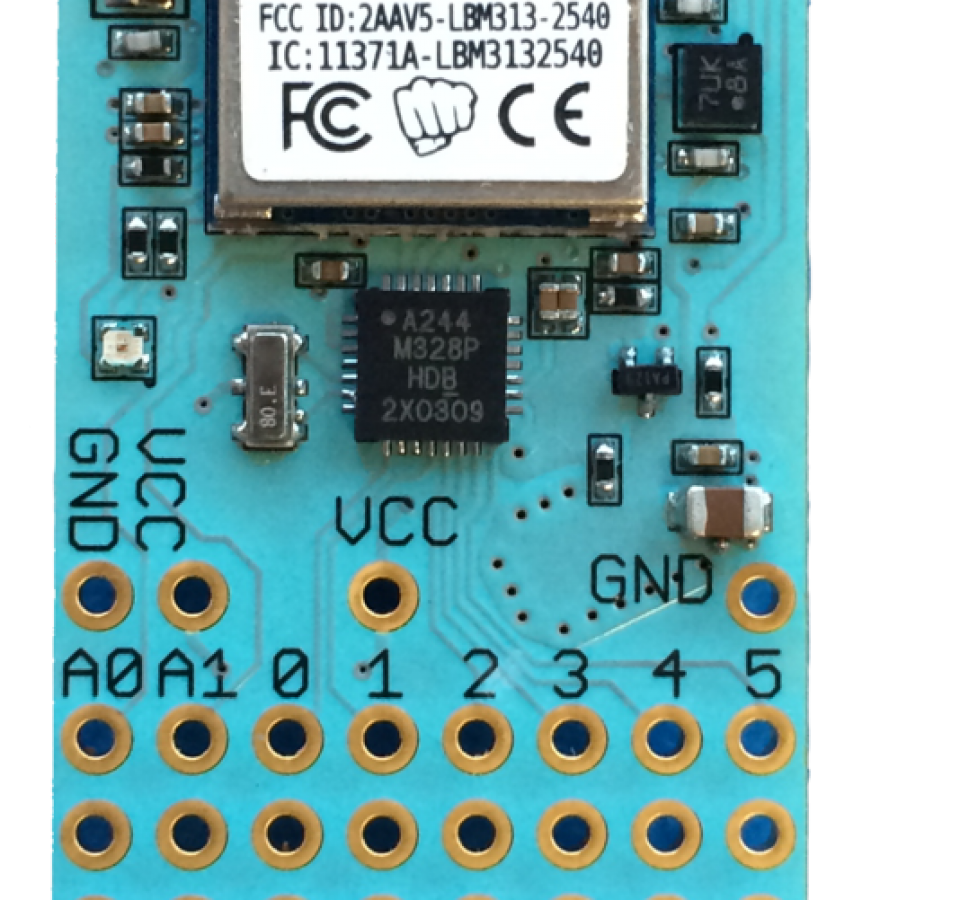

The soil platform(s) are almost ready for prototyping. Tara has decided that the in-the-field platform will reuse the old soil platform in a better-built casing and will communicate over Bluetooth. Three other platforms are also being researched for use ‘on-the-bench’: organic matter content, pH, and Munsell color.

The organic matter content sensor is almost ready for prototyping. Tara has come up with an idea that uses a laser and a photoreceptor. A tube of soil will be placed in a stand, and the laser will scan the tube from one end (top or bottom) to the other, while a photoreceptor on the opposite side of the tube collects light values. Organic matter in soil floats, so once the photoreceptor reads a light value much lower than any previous value, the organic matter layer has started. The photoreceptor should keep recording values until the organic matter layer ends, which shows up as the value rising again (a lot of light is getting through).

Tara has found some resources on measuring pH with an Arduino and will most likely follow those tutorials. More research is still needed on the Munsell color sensor.
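The detection step for the organic matter sensor can be sketched as finding the first run of light readings that drops well below the brightest reading seen so far. This is a minimal sketch, not Tara's implementation: it assumes readings arrive as a list ordered along the tube, and the `drop_ratio` of 0.5 (what counts as "much lower") and the `find_low_band` name are hypothetical choices we would need to calibrate.

```python
from typing import Optional


def find_low_band(readings: list[float], drop_ratio: float = 0.5) -> Optional[tuple[int, int]]:
    """Return (start, end) indices of the first run of readings that fall below
    drop_ratio times the brightest reading seen before the run.

    `end` is exclusive. Returns None if no reading ever drops that low.
    drop_ratio is a hypothetical threshold that would need calibration.
    """
    if not readings:
        return None
    brightest = readings[0]
    start = None
    for i, value in enumerate(readings[1:], start=1):
        if start is None:
            if value < drop_ratio * brightest:
                start = i  # light blocked: the organic matter layer begins
            else:
                brightest = max(brightest, value)  # still clear, track the brightest
        elif value >= drop_ratio * brightest:
            return (start, i)  # light is getting through again: the layer ended
    if start is not None:
        return (start, len(readings))  # layer runs to the end of the scan
    return None


if __name__ == "__main__":
    scan = [900, 910, 905, 300, 250, 280, 890, 900]  # made-up photoreceptor values
    print(find_low_band(scan))
```

Multiplying the band length (`end - start`) by the distance the laser moves between readings would give an estimate of the layer's thickness. Note that this only finds the first dark band; if the scan continues into the settled soil, that would show up as a second dark band.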

The Field Science Android application is finally in a position to be worked on. GitLab is all set up with our old repositories, and we have all been able to pull from them. Our old code, Seshat, has been archived and is now read-only. This will force us to begin work on a new project, which Kristin has already created the shell for and pushed to the GitLab repository. Nic, Kristin, and Charlie will pick a time to meet and discuss where to begin on the application and which code to keep from the old app.

The Ambiance platform has gotten some thought as well. We are no longer going to use Yoctopuce devices: they are more expensive than alternatives such as Arduino and do not work well with Bluetooth (which we very much want), and we have all agreed that using similar types of sensors across the platforms would be nice. More research is being done on which board and sensors to use.

Eamon has been able to extract the rows and columns from all of our CSV files and import them into a Postgres database. He has been working with Flask for the Data Visualization project. There has been some debate about which language is the right one to use: PHP vs. JavaScript. Which one scales better? Eamon is waiting on more information from us about exactly what we want it to do. Some characteristics we already know we want the data viz to have: it should be easy to use the night after sampling, so we can make sure we covered all the spots in the area we intended to; it should start out simple and connect to our project first; and it should use a static data model that we all agree on. Next week, the data viz will be the focus of our meeting, after we briefly discuss the progress of the other projects.
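Loading a CSV into a database table boils down to reading the header, creating a matching table, and bulk-inserting the rows. Below is a minimal sketch of that idea, not Eamon's code: so that it runs without a database server, it uses Python's built-in `sqlite3` as a stand-in for Postgres, stores every column as `TEXT`, and assumes the CSV headers are trusted (they are interpolated into the SQL as quoted identifiers). The `import_csv` name is hypothetical.

```python
import csv
import io
import sqlite3


def import_csv(conn: sqlite3.Connection, table: str, csv_text: str) -> int:
    """Create `table` from the CSV header (every column TEXT) and insert all rows.

    Returns the number of rows inserted. Assumes trusted header names and that
    every data row has the same number of fields as the header.
    """
    reader = csv.reader(io.StringIO(csv_text))
    header = next(reader)
    columns = ", ".join(f'"{name}" TEXT' for name in header)
    conn.execute(f'CREATE TABLE IF NOT EXISTS "{table}" ({columns})')
    placeholders = ", ".join("?" for _ in header)
    rows = [row for row in reader if row]  # skip blank lines
    conn.executemany(f'INSERT INTO "{table}" VALUES ({placeholders})', rows)
    conn.commit()
    return len(rows)


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    count = import_csv(conn, "samples", "site,ph\nA1,6.5\nA2,7.1\n")  # made-up data
    print(count, conn.execute('SELECT * FROM "samples"').fetchall())
```

With Postgres through a driver such as psycopg2, the query placeholder becomes `%s` instead of `?`, and Postgres's own `COPY` command is another option for bulk loads.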

Deeksha has been doing some work on the data model. She has looked at our old data dictionary file and all of the CSVs from our different trips (Iceland 2013, Iceland 2014, and Nicaragua 2014), which are in wildly different formats, and has worked out the exact data model used on each of those trips and how those models differ. She is using a database modeling tool to map out what we want the database to look like (primary keys, tables, etc.) and will then put everything into Postgres so it is all in the same place and format.
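One way to bring the differently formatted trip CSVs into a single format is a per-trip mapping from each trip's column names to one shared set of columns. Below is a minimal sketch of that idea; every source column name in `COLUMN_MAPS`, the shared column list, and the `normalize` function are hypothetical placeholders, not the actual models Deeksha identified.

```python
from typing import Optional

# Hypothetical per-trip column names mapped onto one shared set of columns.
COLUMN_MAPS: dict[str, dict[str, str]] = {
    "iceland_2013": {"Site": "site_id", "Lat": "latitude", "Long": "longitude", "pH": "ph"},
    "iceland_2014": {"site": "site_id", "lat_dd": "latitude", "lon_dd": "longitude", "soil_ph": "ph"},
    "nicaragua_2014": {"SiteID": "site_id", "Latitude": "latitude", "Longitude": "longitude", "PH": "ph"},
}
COMMON_COLUMNS = ("trip", "site_id", "latitude", "longitude", "ph")


def normalize(trip: str, row: dict[str, str]) -> dict[str, Optional[str]]:
    """Rename one CSV row's columns to the shared model.

    Shared columns missing from the row become None; source columns with no
    mapping are dropped (a real import might log them instead).
    """
    mapping = COLUMN_MAPS[trip]
    out: dict[str, Optional[str]] = {col: None for col in COMMON_COLUMNS}
    out["trip"] = trip
    for source_name, value in row.items():
        target = mapping.get(source_name)
        if target is not None:
            out[target] = value
    return out


if __name__ == "__main__":
    print(normalize("iceland_2014", {"site": "S1", "lat_dd": "65.3", "soil_ph": "6.8"}))
```

Keeping the mappings as data rather than code means that adding a future trip only requires a new entry in the dictionary.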