We recently purchased and received our UAV drone, a Phantom 3 Advanced. In choosing the drone we looked at a variety of specifications, but the important ones were price, flight time, range, camera, and hack-ability. The P3 Advanced hit right in the sweet spot.

It’s priced cheap enough to not break the bank (Charlie’s eyes nearly popped out of his head at the cost of some systems… Charlie might be the bank in this analogy) while providing adequate, if not superb, performance in other areas. It’s got a flight time of ~23 minutes and is capable of speeds of roughly 16m/s (35mph), with ascent/descent speeds of 5m/s and 3m/s respectively. When hovering it has a vertical accuracy of +/- 0.1m and a horizontal accuracy of +/- 1.5m (more on this with LIDAR onboard). Though it has no built-in wind resistance (fancy speak for the ability to rapidly increase speed to offset sudden gusts of wind), a pilot monitoring the system will be able to adapt for such things. According to data we have from the Icelandic Met Office, the winds, though frequent, have rarely been stronger than 9m/s during the months we will be there.

In terms of range the Advanced has one of the best combo systems. On board it’s got both GPS and GLONASS (read: Russian GPS) capabilities, and under perfect conditions it will be able to travel up to 5000 meters (yeah, that’s not an extra 0) away from the ground station. Its ceiling is set at 120m above the ground station, but it’s capable of working anywhere up to 6000 meters above sea level. This means that we will be able to set up virtually any flight path for the drone to take within our 23 minute flight before a battery switch is needed. Side note/idea: this will probably be shot down, but since we need solar panels for the energy survey anyway, if the panels work well we might be able to have a remote charging station for discharged batteries.
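
To sanity-check the range and flight-time numbers above, here is a rough back-of-the-envelope sketch. It only uses the figures quoted in this post (16m/s top speed, ~23 minutes per battery, 5000 meters of range, 9m/s winds). Everything else is an assumption of mine: I treat 16m/s as airspeed, pretend the wind is a steady headwind on the way out and a tailwind on the way back, pretend the battery really lasts 23 minutes at full speed, and keep a made-up 25% battery reserve. The function names are just for illustration.

```python
# Figures quoted in this post
MAX_SPEED_MS = 16.0        # top speed in m/s (assumed here to be airspeed)
FLIGHT_TIME_S = 23 * 60    # ~23 minutes per battery, in seconds
MAX_RANGE_M = 5000.0       # max distance from the ground station, in meters


def round_trip_seconds(distance_m, airspeed_ms=MAX_SPEED_MS, wind_ms=0.0):
    """Time to fly out into a steady headwind and come back with it as a tailwind."""
    out_speed = airspeed_ms - wind_ms
    back_speed = airspeed_ms + wind_ms
    if out_speed <= 0:
        raise ValueError("wind is at or above airspeed; the drone cannot make headway")
    return distance_m / out_speed + distance_m / back_speed


def fits_in_battery(distance_m, wind_ms=0.0, reserve_fraction=0.25):
    """True if the round trip fits in one battery, keeping a reserve (assumed 25%)."""
    budget_s = FLIGHT_TIME_S * (1.0 - reserve_fraction)
    return round_trip_seconds(distance_m, wind_ms=wind_ms) <= budget_s


if __name__ == "__main__":
    for wind in (0.0, 9.0):
        minutes = round_trip_seconds(MAX_RANGE_M, wind_ms=wind) / 60.0
        ok = fits_in_battery(MAX_RANGE_M, wind_ms=wind)
        print(f"wind {wind:>4.1f} m/s: {minutes:.1f} min round trip, fits: {ok}")
```

Under these (optimistic) assumptions a full 5000 meter out-and-back takes a bit over 10 minutes in calm air and a bit over 15 minutes in a 9m/s wind, so real flight paths will want to stay well inside the maximum range.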

The biggest obstacle weather/flight wise will be working in rain. I am looking into possible “water resistance” techniques for the drone that are similar to what videographers have done when filming around waterfalls and during mist or light rains. The most common is coating the electronics in some sort of $ever_dry_product, but before we go spraying our drone with strange substances I’d like to be absolutely sure of its success. (Big note that this is only weather resistance, in the same way that if you go swimming in a rain coat you will still get wet.)

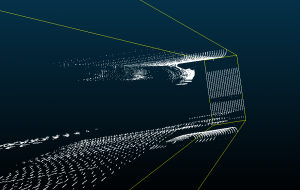

The Advanced’s camera is also a pretty neat piece of technology. First off, it’s TINY and lightweight but capable of 2.7K (30fps) video and 12MP stills (translates to roughly 4000 x 3000 pixels). The Advanced can store the stills in DNG RAW format so they retain as much digital info as possible, which we can then try to harvest IR and other spectra from in post-processing. With images and video of this quality we will be able to apply photogrammetry to measure distances between objects for mapping purposes natively. With a LIDAR sensor added in, we should be able to combine the two and, through machine learning, get something incredibly comprehensive.
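
As a taste of the photogrammetry idea, here is a minimal sketch of how a distance in pixels turns into a distance on the ground. The 4000 pixel image width and the 120m ceiling come from this post. The horizontal field of view is NOT from the post: the 80 degrees below is a hypothetical placeholder, and the real value has to come from the camera specs or a calibration. It also assumes the camera points straight down at flat ground and ignores lens distortion, so treat it as an illustration rather than our actual pipeline.

```python
import math

IMAGE_WIDTH_PX = 4000    # still width quoted in this post
CEILING_M = 120.0        # altitude ceiling quoted in this post

# Hypothetical placeholder, not a measured or published value.
HYPOTHETICAL_HFOV_DEG = 80.0


def ground_sample_distance(altitude_m, hfov_deg, width_px=IMAGE_WIDTH_PX):
    """Meters of ground covered by one pixel, camera pointing straight down."""
    footprint_m = 2.0 * altitude_m * math.tan(math.radians(hfov_deg) / 2.0)
    return footprint_m / width_px


def pixels_to_meters(pixel_distance, altitude_m, hfov_deg):
    """Convert a distance measured in image pixels to meters on flat ground."""
    return pixel_distance * ground_sample_distance(altitude_m, hfov_deg)


if __name__ == "__main__":
    gsd = ground_sample_distance(CEILING_M, HYPOTHETICAL_HFOV_DEG)
    print(f"about {gsd * 100:.1f} cm of ground per pixel at {CEILING_M:.0f} m")
```

With the placeholder field of view this works out to roughly 5cm of ground per pixel at the 120m ceiling; flying lower shrinks that proportionally.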

Hack-ability is pretty important for our needs, given that we as a group could probably be called a group of science hackers. There are two flavours of this hack-ableness for the drone: software and hardware. Software is pretty straightforward: DJI is kind enough to provide a developer SDK that gives us the ability to customise and use the drone’s capabilities. What’s going to be important is finding how we can get this information into the FieldDay app and how that’s going to look. Hardware is another thing entirely though. The Phantom series is slightly like Apple in that it’s a beautifully designed chassis that’s not really meant to be played around with (in fact, Google only returns hardware changes that deal with aesthetics). So, of course, our plan is to stick a modular LIDAR system on the back of it, which may require quite a few body modifications!

Looking forward, I’ll be planning out how our ground station will work with the drone. This is most likely going to mean a laptop computer + tablet + controller + batteries in the field (my laptop is a 13″ MacBook Pro with 16GB RAM and a 256GB SSD). The waypoint guide system, or “drone” part of the UAV, will probably be easiest to do with a laptop, and we can get some fancy features from having an actual computer near at hand. The controller itself will be nearby for manual piloting; it uses a tablet as a viewfinder and for some controls. At highest quality video (2.7K resolution, 30fps) the Advanced will record about 3GB per 10 minutes. Given the flight time will be ~23 minutes, that’s about 7GB of video per flight, so it’s probably going to be ~8 to 10GB per flight captured with video + LIDAR. Luckily, on board the Advanced can hold a 64GB extreme speed SD card to help with storage. The flight data will most likely be stored on the laptop in the field and then transferred to backups once at basecamp. As part of the ground station the laptop is probably going to be running some form of mapping program for real time mappings (this will be looked at further down the road).
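
The storage arithmetic above is easy to play with in a few lines. This sketch uses only the figures quoted in this post (about 3GB per 10 minutes of video, ~23 minute flights, a 64GB card, ~8 to 10GB per flight once LIDAR is included). It assumes, for simplicity, that everything from a flight lands on the one card, which may not be how the LIDAR data ends up being stored.

```python
# Figures quoted in this post
VIDEO_GB_PER_MIN = 3.0 / 10.0   # ~3GB per 10 minutes at 2.7K, 30fps
FLIGHT_MIN = 23                 # ~23 minutes per battery
CARD_GB = 64                    # SD card capacity


def video_gb_per_flight(minutes=FLIGHT_MIN):
    """Approximate video data recorded over one flight, in GB."""
    return VIDEO_GB_PER_MIN * minutes


def flights_per_card(per_flight_gb, card_gb=CARD_GB):
    """Whole flights that fit on the card before it needs offloading."""
    return int(card_gb // per_flight_gb)


if __name__ == "__main__":
    print(f"video only: ~{video_gb_per_flight():.1f} GB per flight")
    for per_flight in (8, 10):
        print(f"at {per_flight} GB per flight: {flights_per_card(per_flight)} flights per card")
```

So a single card should cover somewhere around six to eight flights before it has to be emptied onto the laptop.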

Lastly and most important: The drone needs a name. I’ll be taking suggestions. (Kristin says Bob is not an option fyi)

I’ve mentioned a LIDAR system several times, so please look at this post for further reading!