We took the LiDAR out for a spin on Friday. We had a beautiful (read: high tech) rig: Neil drove, Kellan held the LiDAR out the sunroof, and Nic was spread out across the back seat with the drone (Kia), a laptop, and a router, collecting data from the LiDAR. We re-learned that Kia doesn't like having a lot of metal around, which complicated our plan to collect GPS data (more on why GPS data matters below).

Note: Kia is the name of our drone, not the make of the car we were in.

After a lot of indoor practice with the LiDAR, we determined that the best way to set up Kia for Iceland is a balanced rig with the weight distributed evenly on both sides. The components we have to account for are the LiDAR, a Wi-Fi transmitter, and battery packs for both. Neil and Charlie are working on building these into a reliable prototype.

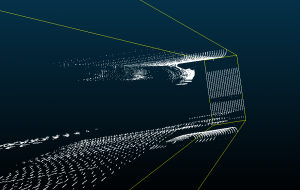

Our ultimate goal is to create point clouds from the LiDAR data based on GPS coordinates. During Friday's test run we collected data, but we are still mapping it based on time instead of GPS. On Monday we plan to pull the GPS data from Friday's test run off Kia and align the GPS/telemetry data with the LiDAR data, which will let us place each point correctly in the point cloud. We can't map the LiDAR data using time alone: the LiDAR spins faster than our time readings, and time is linear while our flight path most likely won't be. If we built the point cloud from time, we would get a straight line along which the data is stretched out and repeated.